Group Calibration Sessions

Group calibrations let multiple calibrators review conversations together and reach a consensus. The result is a "gold standard" evaluation that can be compared against the AI's evaluation.

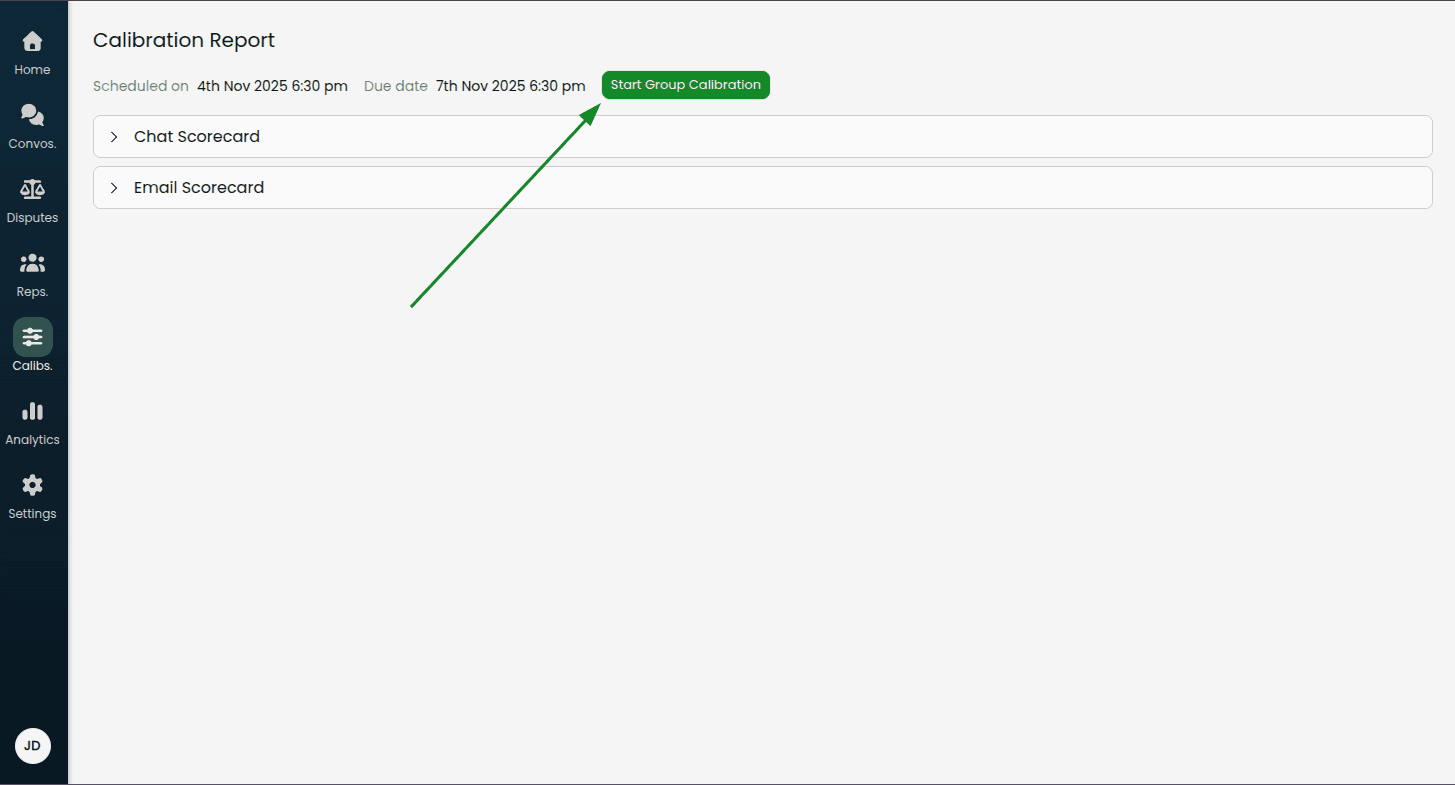

Starting a Group Calibration

From a calibration report, click Start Group Calibration

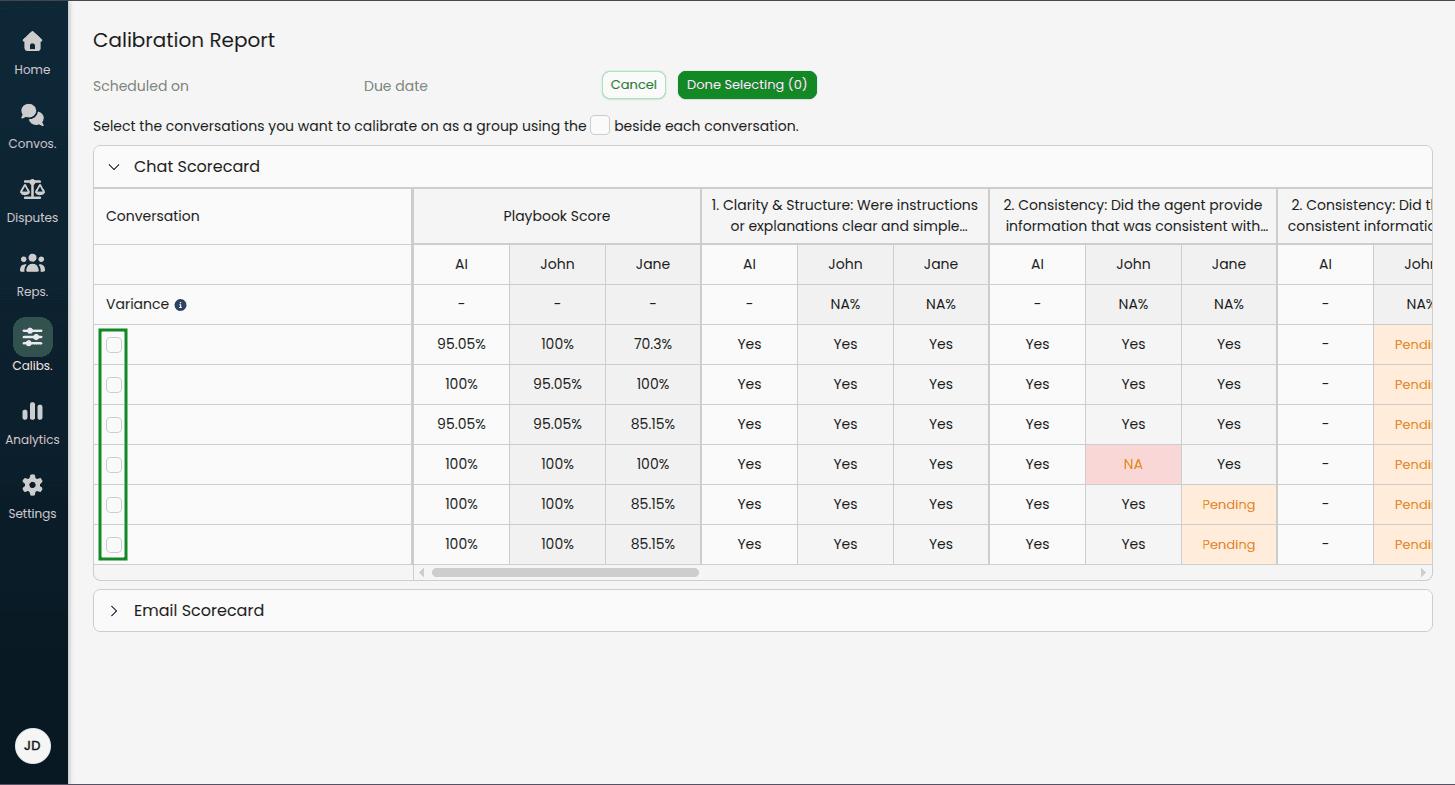

Select the conversations you want to calibrate as a group using the checkboxes

Click Done Selecting

Note: To change the selected conversations later, click "Change Conversations".

Completing a Group Calibration

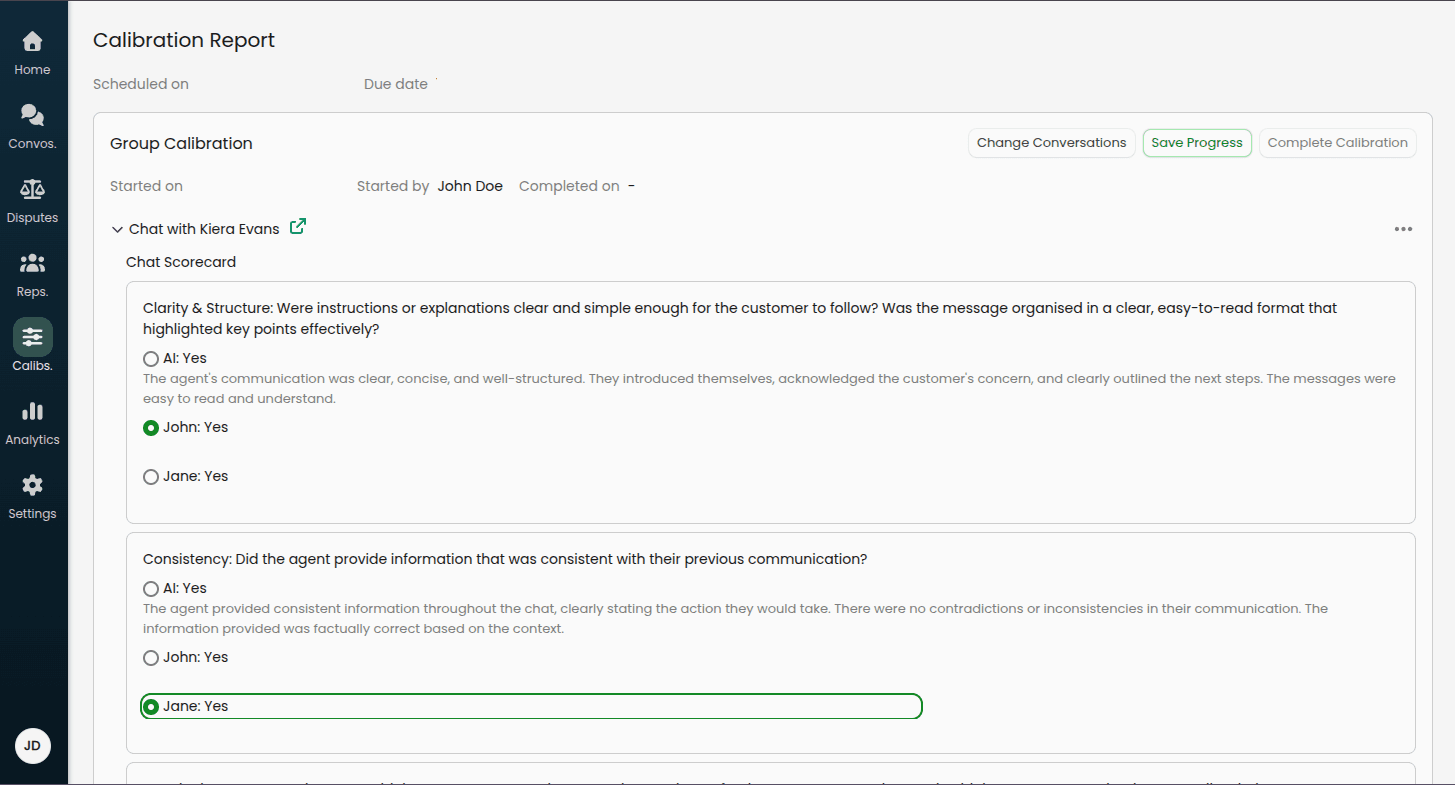

Once started, the Group Calibration section appears at the top of the report.

Review Process

For each selected conversation:

Expand the conversation in the accordion

Review each playbook and criterion

Select the correct evaluation from the available options:

AI's evaluation

Each calibrator's evaluation

Consensus Selection

Radio buttons display:

"AI: [value]" - The AI's evaluation

"[Calibrator Name]: [value]" - Each calibrator's evaluation

Any remarks provided by the AI or calibrator

Select the option that the team agrees represents the correct evaluation.

Progress Tracking

Each conversation shows a checkmark icon when all criteria have been reviewed and consensus values selected.

Saving and Completing

Save Progress: Saves your current selections without completing the calibration (you can return later)

Complete Calibration: Finalizes the group calibration (only enabled when all conversations are complete)
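The completion rule described above can be sketched as a small data model. This is only an illustration of the logic, not the product's implementation: the `ConversationReview` class, its fields, and the `can_complete_calibration` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical key for one reviewed item: (playbook name, criterion name).
CriterionKey = Tuple[str, str]


@dataclass
class ConversationReview:
    """Consensus selections for one conversation (illustrative model only)."""
    conversation_id: str
    criteria: List[CriterionKey]
    # Maps a criterion to the selected consensus value.
    # A missing key or None means no value has been selected yet.
    consensus: Dict[CriterionKey, Optional[str]] = field(default_factory=dict)

    def is_complete(self) -> bool:
        # A conversation earns its checkmark once every criterion has a value.
        return all(self.consensus.get(key) is not None for key in self.criteria)


def can_complete_calibration(reviews: List[ConversationReview]) -> bool:
    # "Complete Calibration" is enabled only when all conversations are complete.
    return bool(reviews) and all(review.is_complete() for review in reviews)
```

In this sketch, saving progress would simply persist the partially filled `consensus` mappings, while completion requires `can_complete_calibration` to return `True`.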

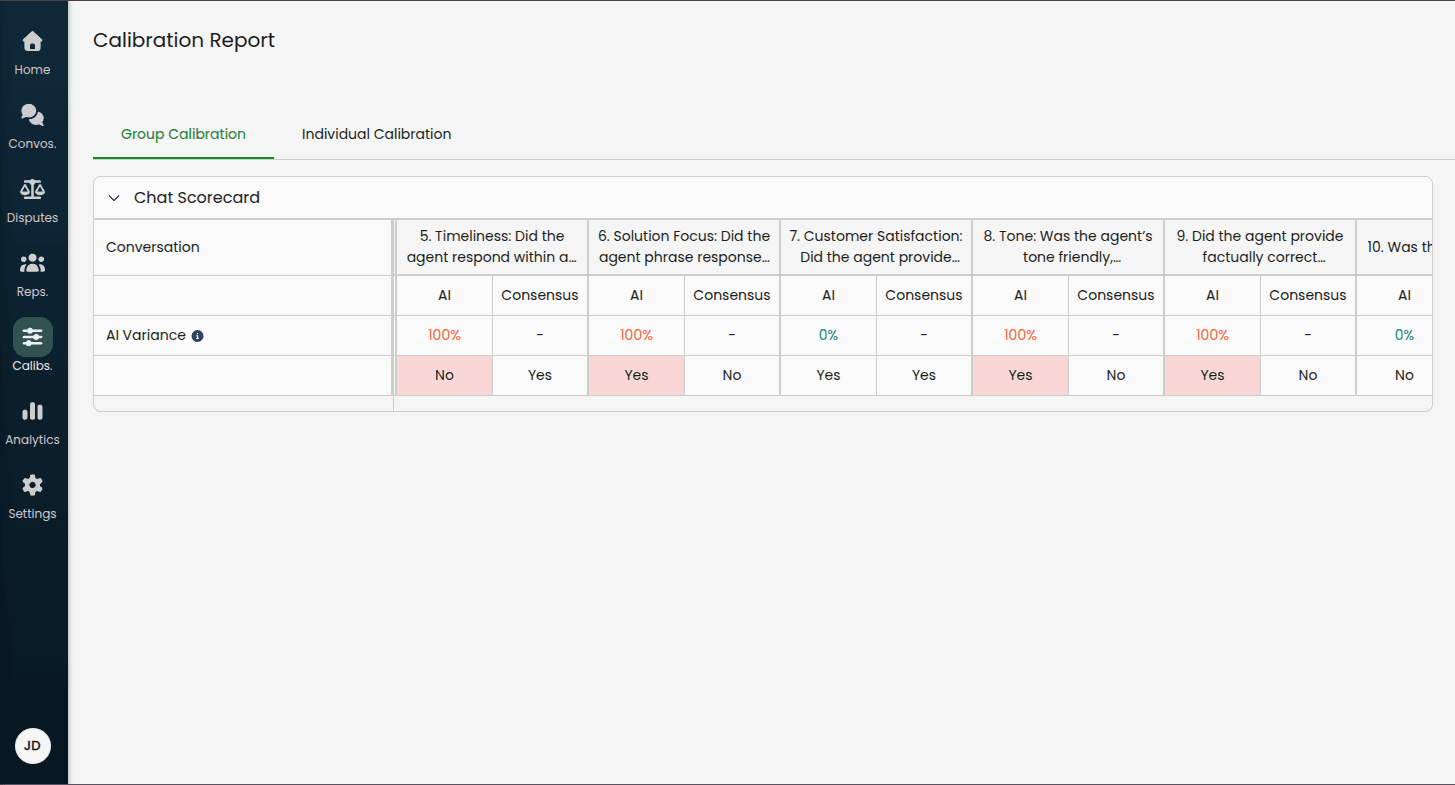

Group Calibration Report View

After completing a group calibration, the report switches to a tabbed view:

Group Calibration Tab

Displays a table comparing AI evaluations vs. consensus values:

AI Column: The AI's original evaluation

Consensus Column: The group's agreed-upon correct evaluation

AI Variance Row: Shows the percentage of evaluations where the AI's value differs from the consensus value

Highlighted cells (red/pink background) indicate where the AI differed from the consensus.
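As a rough illustration of how an AI variance percentage could be derived, the sketch below counts the criteria where the AI's value differs from the consensus value and divides by the total. The exact formula the product uses is not documented here, so treat this as an assumption. The function name and the dictionary layout are hypothetical.

```python
from typing import Dict, Hashable


def ai_variance_percent(ai: Dict[Hashable, str], consensus: Dict[Hashable, str]) -> float:
    """Percentage of consensus evaluations where the AI's value differs.

    Assumed formula: disagreements / total consensus evaluations * 100.
    """
    if not consensus:
        return 0.0
    disagreements = sum(
        1 for key, value in consensus.items() if ai.get(key) != value
    )
    return 100.0 * disagreements / len(consensus)


# Example: the AI disagrees with the consensus on one of four criteria.
ai_values = {"greeting": "Yes", "empathy": "No", "resolution": "Yes", "closing": "Yes"}
consensus_values = {"greeting": "Yes", "empathy": "Yes", "resolution": "Yes", "closing": "Yes"}
variance = ai_variance_percent(ai_values, consensus_values)  # 25.0
```

The disagreeing keys in this sketch correspond to the cells that would be highlighted in the table.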

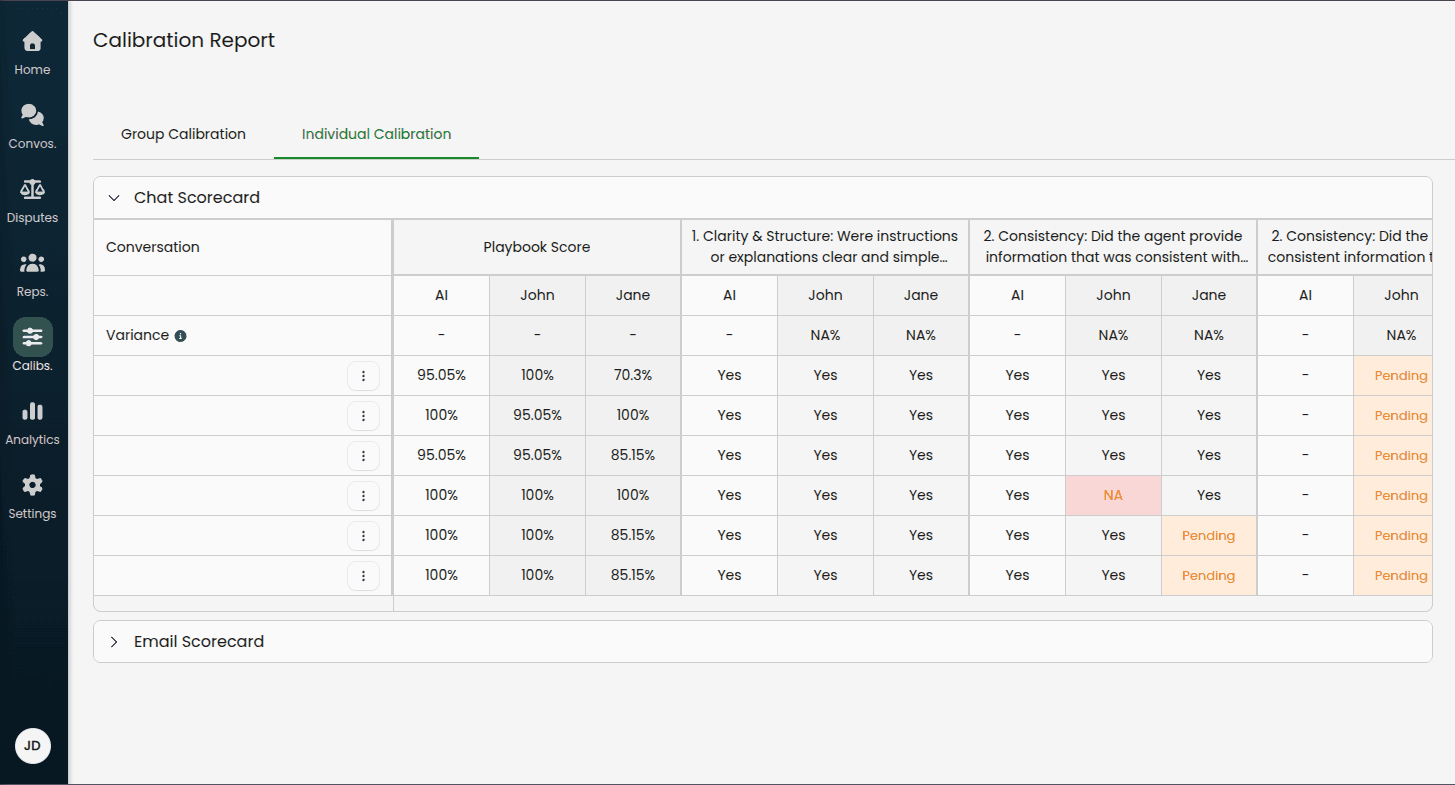

Individual Calibration Tab

Shows the original individual calibration table with all calibrators' evaluations.

Using Group Calibration Insights

A completed group calibration shows where the AI and your team disagree, and which criteria drive that disagreement.

High AI variance on specific criteria may indicate:

The playbook criterion needs clearer definition

The AI needs retraining on edge cases

The criterion might be too subjective for consistent evaluation

Use these insights to:

Update playbook descriptions and examples

Create test cases from consensus evaluations

Train the AI on the consensus values

Adjust scoring weights if needed
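One way to think about "creating test cases from consensus evaluations" is to treat each consensus value as the expected answer and re-check the AI against it later. The sketch below assumes a hypothetical `evaluate` callable that stands in for whatever produces the AI's evaluation. None of these names come from the product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class ConsensusTestCase:
    """One expected evaluation derived from a group calibration (illustrative)."""
    conversation_id: str
    criterion: str
    expected: str  # the consensus value


def build_test_cases(consensus: Dict[Tuple[str, str], str]) -> List[ConsensusTestCase]:
    # Keys are (conversation_id, criterion); sorting keeps the output stable.
    return [
        ConsensusTestCase(conversation_id, criterion, value)
        for (conversation_id, criterion), value in sorted(consensus.items())
    ]


def pass_rate(cases: List[ConsensusTestCase], evaluate: Callable[[str, str], str]) -> float:
    """Percentage of test cases where evaluate() matches the consensus value."""
    if not cases:
        return 0.0
    passed = sum(
        1 for case in cases
        if evaluate(case.conversation_id, case.criterion) == case.expected
    )
    return 100.0 * passed / len(cases)
```

Tracking the pass rate after each playbook change gives a simple regression check against decisions the team has already agreed on.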

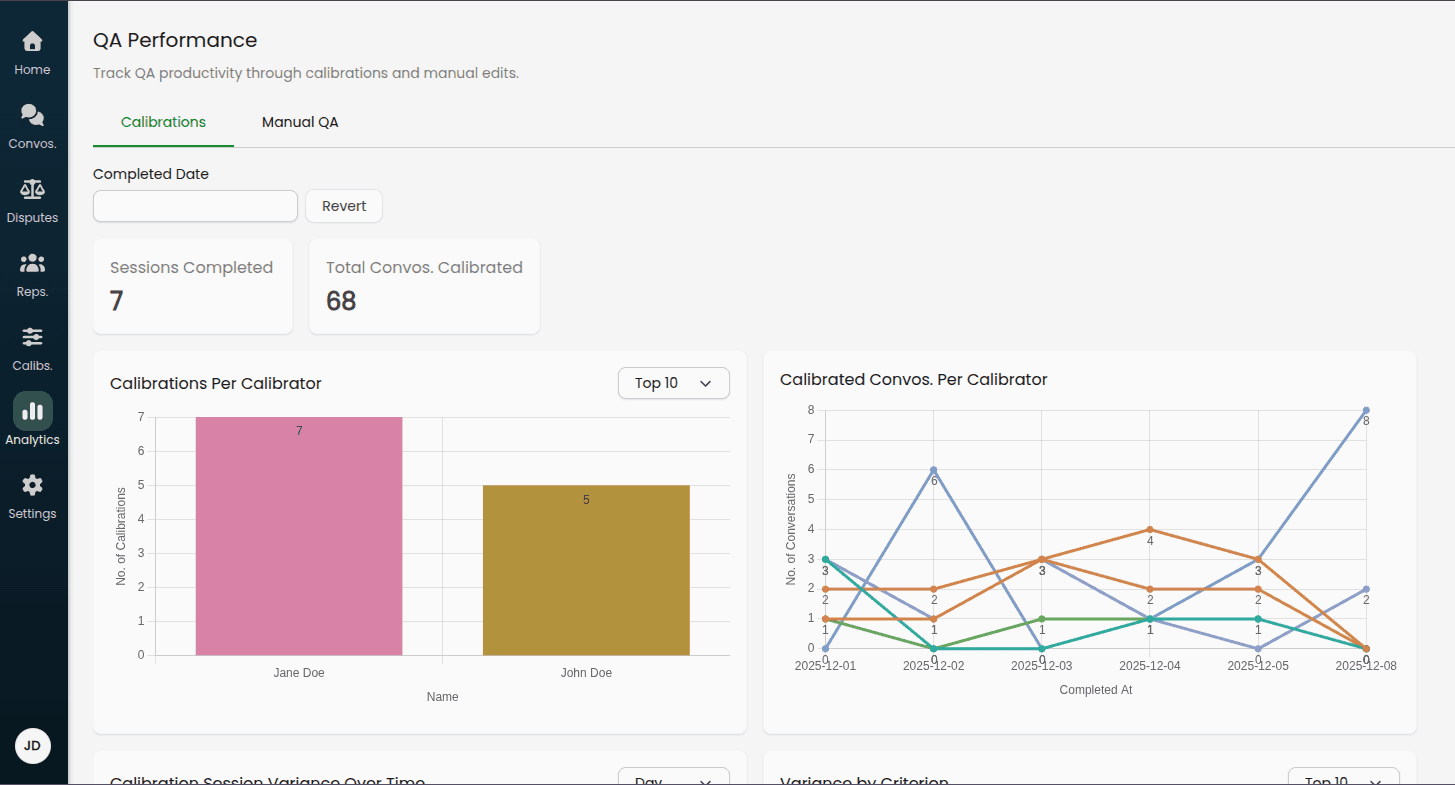

Analytics & Performance Tracking

Monitor your calibration program's effectiveness through the Analytics dashboard.

Accessing Calibration Analytics

Navigate to Analytics > QA Performance > Calibrations

Filtering Data

Use the Completed Date filter to analyze specific time periods:

Last 7 days

Last 30 days

Custom date ranges
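If you export calibration data and want to reproduce these filters outside the dashboard, a minimal sketch could look like the following. It assumes that "Last N days" means an inclusive range ending today, which may differ from how the dashboard defines it. The function names and the `(session_id, completed_date)` tuple layout are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

# Hypothetical record: (session_id, completed_date); None means not completed.
Session = Tuple[str, Optional[date]]


def preset_range(days: int, today: date) -> Tuple[date, date]:
    """Inclusive date range covering the last `days` days, ending today."""
    return today - timedelta(days=days - 1), today


def filter_by_completed_date(sessions: List[Session], start: date, end: date) -> List[Session]:
    # Keep only completed sessions whose completion date falls within [start, end].
    return [
        session for session in sessions
        if session[1] is not None and start <= session[1] <= end
    ]
```

A custom date range is the same filter with `start` and `end` supplied directly instead of coming from `preset_range`.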

Interpreting the Data

Healthy calibration programs typically show:

Consistent weekly or daily completion rates

Gradual decrease in variance over time (as playbooks improve)

High completion rates relative to scheduled sessions

Warning signs to watch for:

Multiple missed or overdue sessions

Increasing variance over time

A single calibrator completing all reviews (reviews should be distributed across the team)
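Two of the warning signs above can be checked mechanically on exported data. The sketch below is one possible approach, not the dashboard's logic: it treats variance as "increasing" when a least-squares trend line has a positive slope, and flags a reviewer imbalance when one calibrator's share of reviews reaches a threshold. Both function names and the default threshold are assumptions.

```python
from collections import Counter
from typing import List, Sequence


def variance_is_increasing(variances: Sequence[float]) -> bool:
    """True when the least-squares slope of variance over time is positive.

    `variances` is ordered oldest to newest, one value per period.
    """
    n = len(variances)
    if n < 2:
        return False
    mean_x = (n - 1) / 2
    mean_y = sum(variances) / n
    # The slope's denominator is always positive, so the numerator's sign suffices.
    numerator = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(variances))
    return numerator > 0


def single_calibrator_dominates(reviewers: List[str], threshold: float = 1.0) -> bool:
    """True when one calibrator completed at least `threshold` share of reviews.

    `reviewers` holds one calibrator name per completed review.
    """
    if not reviewers:
        return False
    top_count = Counter(reviewers).most_common(1)[0][1]
    return top_count / len(reviewers) >= threshold
```

With the default threshold of 1.0 the second check fires only when one person completed every review. Lowering it (for example to 0.8) catches heavily skewed distributions as well.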

Best Practices

Starting Your Calibration Program

Weeks 1-2: Pilot Phase

Add 2-3 calibrators initially

Sample 5-10 conversations per session

Run daily sessions to build momentum

Focus on reviewing results together as a team

Weeks 3-4: Establish Rhythm

Adjust sample size based on calibrator capacity

Fine-tune frequency (daily vs. weekly)

Begin identifying common variance patterns

Start making playbook improvements based on findings

Month 2+: Optimize

Run regular group calibrations for complex cases

Build test case library from calibrated conversations

Track variance trends over time

Expand calibrator team if needed

Calibrator Selection

Good calibrator candidates:

Deep understanding of your quality standards

Consistent, reliable judgment

Available time to complete reviews on schedule

Able to provide constructive feedback

Tips:

Start with 2-3 calibrators to keep sessions manageable

Rotate calibrators periodically to avoid bias

Ensure calibrators represent different teams/perspectives

Sampling Strategy

Balanced sampling considers:

Conversation scores: Include both high and low scoring conversations

Time of day: Morning, afternoon, and evening interactions

Channels: Calls, emails, chats in proportion to your volume

Representatives: All active representatives unless excluded

The system balances the sample across these factors automatically. You can adjust the sample size if you want to examine specific patterns in more depth.
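To make "in proportion to your volume" concrete, the sketch below shows one common way to do proportional sampling by channel: allocate slots with the largest-remainder method, then pick randomly within each channel. This is an illustration of the idea only. The product's actual sampling algorithm is not documented here, and the function names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def allocate_by_volume(volumes: Dict[str, int], sample_size: int) -> Dict[str, int]:
    """Split sample_size across groups in proportion to volume (largest remainder)."""
    total = sum(volumes.values())
    if total == 0 or sample_size <= 0:
        return {name: 0 for name in volumes}
    sample_size = min(sample_size, total)
    exact = {name: sample_size * count / total for name, count in volumes.items()}
    allocation = {name: int(share) for name, share in exact.items()}
    leftover = sample_size - sum(allocation.values())
    # Hand remaining slots to the groups with the largest fractional parts.
    by_remainder = sorted(volumes, key=lambda name: exact[name] - allocation[name], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


def sample_conversations(
    conversations: List[Tuple[str, str]], sample_size: int, seed: Optional[int] = None
) -> List[str]:
    """Pick conversation IDs so that channels appear in proportion to volume.

    `conversations` holds (conversation_id, channel) pairs.
    """
    rng = random.Random(seed)
    by_channel: Dict[str, List[str]] = defaultdict(list)
    for conversation_id, channel in conversations:
        by_channel[channel].append(conversation_id)
    allocation = allocate_by_volume(
        {channel: len(ids) for channel, ids in by_channel.items()}, sample_size
    )
    picked: List[str] = []
    for channel, ids in by_channel.items():
        picked.extend(rng.sample(ids, allocation[channel]))
    return picked
```

The same allocation step could be applied to the other factors (score band, time of day, representative) by grouping on that attribute instead of the channel.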

Responding to High Variance

When you see high variance between AI and calibrators:

1. Review the Specific Disagreements

Click into cells to read remarks

Look for patterns (same criterion, same type of conversation, etc.)

2. Diagnose the Root Cause

Is the criterion description unclear?

Is this an edge case not covered in the playbook?

Do calibrators need training on this criterion?

Is the AI missing context?

3. Take Action

Unclear criteria: Rewrite playbook descriptions with specific examples

Edge cases: Document them in the playbook or add to test cases

Training needs: Share calibration results in team meetings

AI issues: Create test cases to train the AI on correct evaluations

4. Measure Improvement

Track variance percentage over time

Expect gradual decrease as playbooks improve

Celebrate wins when variance drops below 10%
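The "look for patterns" and "measure improvement" steps can be approximated on exported data by computing variance per criterion and listing the criteria that have not yet dropped below the 10% mark. This is a minimal sketch under the assumption that variance is the share of evaluations where the AI and the human value differ. The record layout and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record: (criterion, ai_value, human_value).
Record = Tuple[str, str, str]


def variance_by_criterion(records: Iterable[Record]) -> Dict[str, float]:
    """Percentage of evaluations per criterion where the AI and human values differ."""
    totals: Dict[str, int] = defaultdict(int)
    differences: Dict[str, int] = defaultdict(int)
    for criterion, ai_value, human_value in records:
        totals[criterion] += 1
        if ai_value != human_value:
            differences[criterion] += 1
    return {c: 100.0 * differences[c] / totals[c] for c in totals}


def criteria_needing_attention(records: Iterable[Record], threshold: float = 10.0) -> List[str]:
    """Criteria at or above the threshold, highest variance first."""
    per_criterion = variance_by_criterion(records)
    flagged = [c for c, v in per_criterion.items() if v >= threshold]
    return sorted(flagged, key=lambda c: -per_criterion[c])
```

Running this on each period's data and comparing the results over time shows whether playbook changes are reducing variance on the criteria that were flagged.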

Group Calibration Best Practices

When to use group calibration:

Complex edge cases that need discussion

High-variance criteria needing clarification

Training new calibrators

Quarterly deep-dives into playbook accuracy

How to run effective sessions:

Review individually first: Have calibrators complete individual reviews before the group session

Focus discussion: Only discuss items with disagreement

Document decisions: Use remarks to explain why consensus was reached

Update playbooks: Immediately update criteria based on group discussions

Create tests: Add consensus conversations to test suites

Frequency recommendations:

Monthly group calibrations for mature programs

Weekly during playbook development or major changes

Ad-hoc for specific training needs

Maintaining Momentum

Keep calibrators engaged:

Share insights from calibration data in team meetings

Recognize calibrators who complete reviews on time

Demonstrate playbook improvements driven by their feedback

Rotate focus areas (different playbooks, criteria) to maintain interest

Avoid calibration fatigue:

Don't over-sample (10-20 conversations per session is usually sufficient)

Vary the conversation types in samples

Provide adequate time for completion (3-7 days)

Automate reporting and recognition where possible

Continuous Improvement Cycle

Calibrate: Run regular sessions per your schedule

Analyze: Review variance metrics and specific disagreements

Improve: Update playbooks, train calibrators, adjust AI

Validate: Create test cases to prevent regression

Repeat: Continue the cycle; variance should decrease over time

Success metrics to track:

Variance trends (decreasing over time)

Calibrator completion rates (consistently high)

Test case pass rates (improving over time)

QA team confidence scores (via surveys)